Bagging Machine Learning Explained

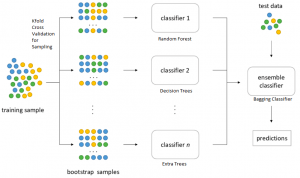

The research work explained the organization and procedure of many machine learning approaches used to filter email spam. Random forests are a modification of bagged decision trees that build a large collection of de-correlated trees to further improve predictive performance.

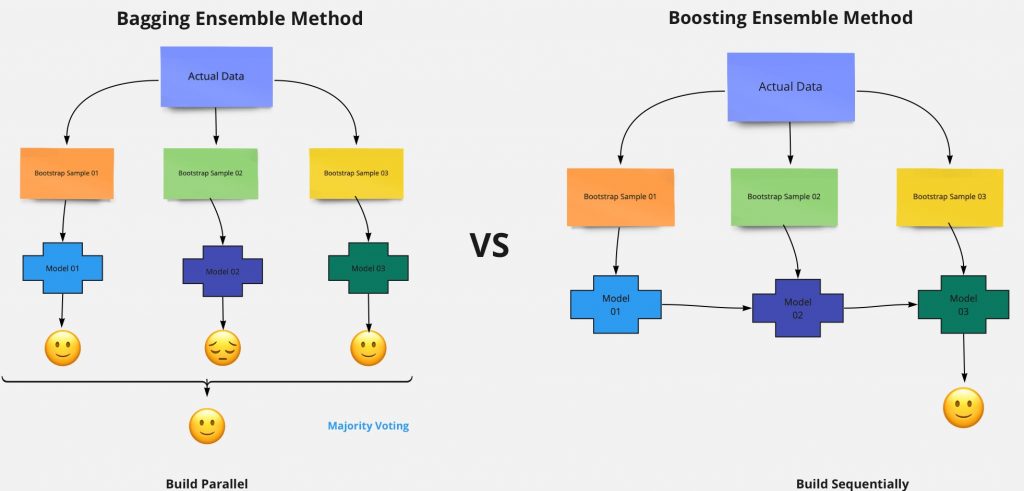

What Is The Difference Between Bagging And Boosting Kdnuggets

Every method is explained comprehensively, both intuitively and mathematically.

A popular one, but there are other good guys in the class. How to draw or determine the decision boundary is the most critical part of SVM algorithms. The box plot contains the upper and lower quartiles, so the box basically spans the.

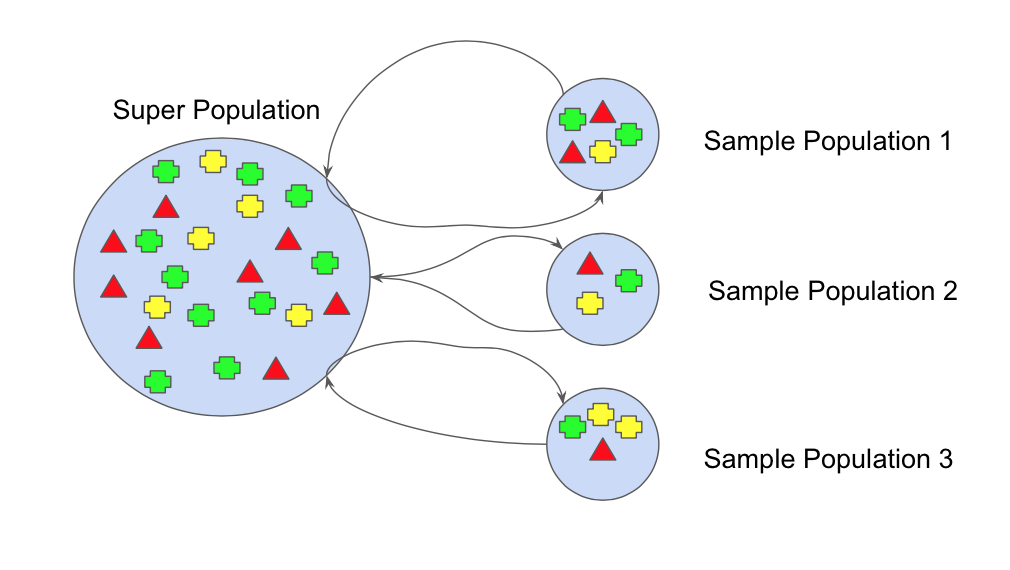

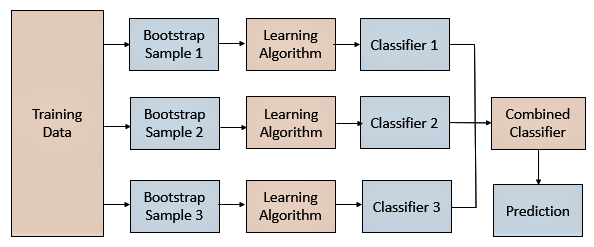

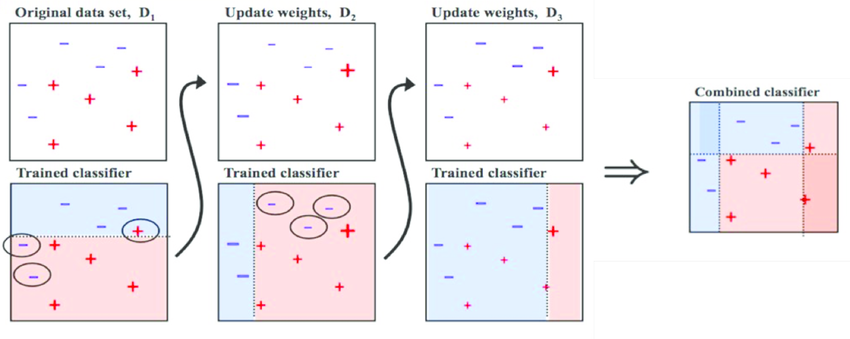

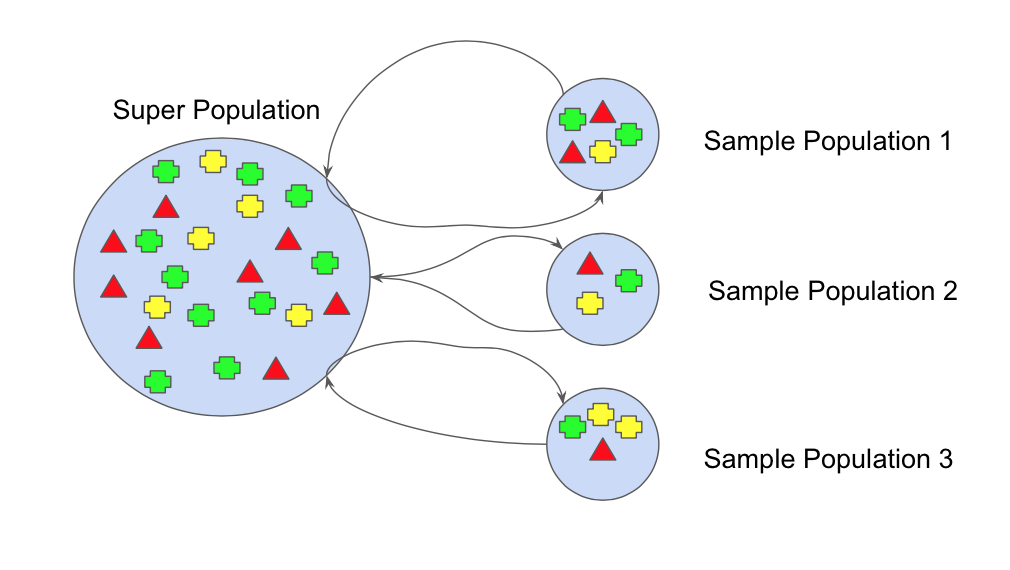

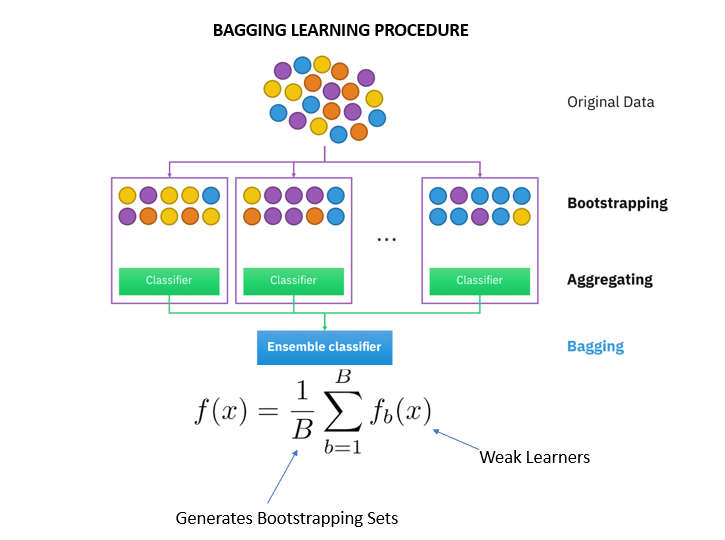

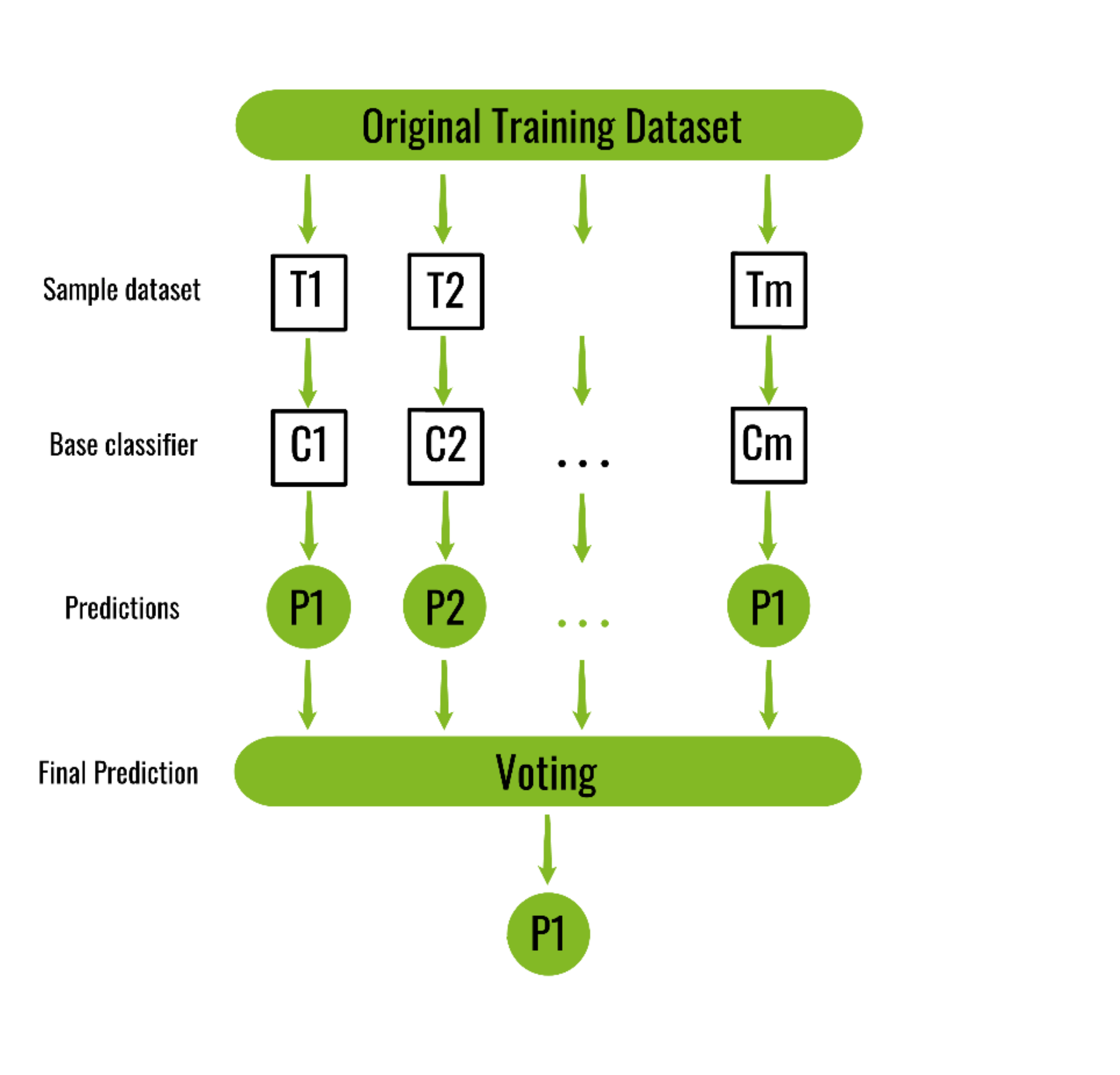

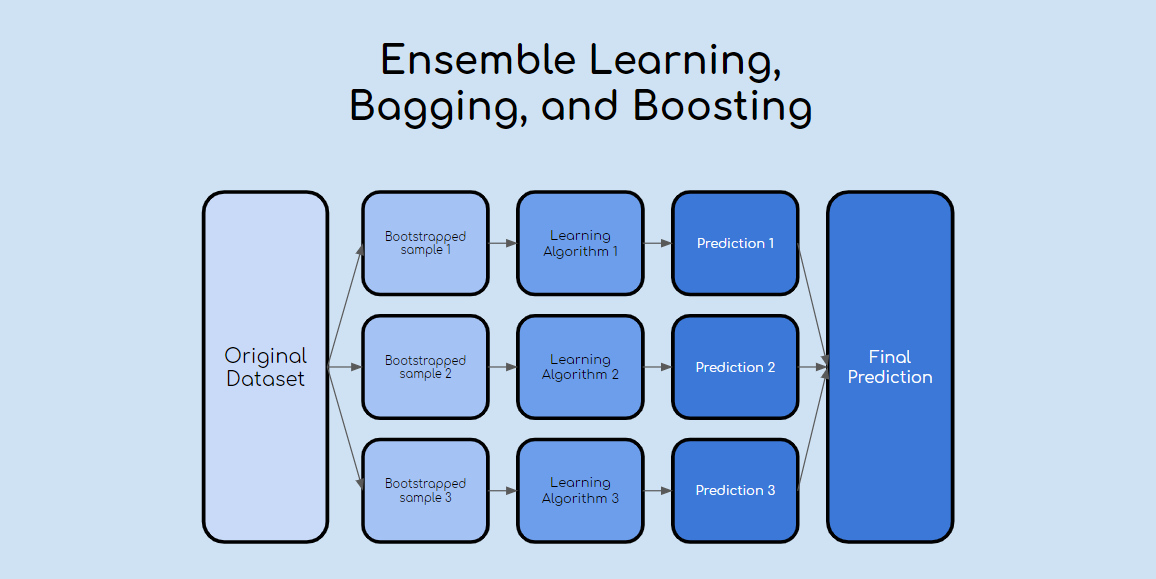

The cost function involves evaluating the coefficients in the machine learning model by calculating a prediction for the model for each training instance in the dataset and comparing. In Section 2.4.2 we learned about bootstrapping as a resampling procedure, which creates b new bootstrap samples by drawing samples with replacement from the original training data. Neural networks are one type of machine learning model.
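The bootstrapping step described above can be sketched in a few lines. This is a minimal illustration using only the Python standard library; the function name `bootstrap_samples`, the seed, and the toy data are assumptions made for the example, not part of the original text.

```python
import random

def bootstrap_samples(data, b, seed=0):
    """Return b bootstrap samples, each drawn with replacement from data."""
    rng = random.Random(seed)
    n = len(data)
    # Each bootstrap sample has the same size as the original training data,
    # so some observations repeat and others are left out.
    return [rng.choices(data, k=n) for _ in range(b)]

training_data = [2.1, 3.4, 1.9, 5.0, 4.2]  # toy values, for illustration only
samples = bootstrap_samples(training_data, b=3)
for sample in samples:
    print(sample)
```

Because sampling is done with replacement, each sample typically contains duplicates of some training points while omitting others.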

A box plot represents the distribution of the data and its variability. Arthur Samuel, a pioneer in the field of artificial intelligence and computer gaming, coined the term "Machine Learning". He defined machine learning as a "field of study that gives computers the capability to learn without being explicitly programmed". The following methods can be used to screen outliers.
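The list of screening methods is not given here. One widely used rule that follows from the box plot description is to flag points lying more than 1.5 times the interquartile range beyond the quartiles. A minimal sketch, assuming that rule (the multiplier `k=1.5`, the function name `iqr_outliers`, and the sample readings are illustrative choices, not from the source):

```python
import statistics

def iqr_outliers(values, k=1.5):
    """Return the values lying more than k * IQR outside the quartile box."""
    # statistics.quantiles with n=4 returns the three quartile cut points.
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]

readings = [10, 12, 11, 13, 12, 11, 10, 95]  # toy data with one extreme value
flagged = iqr_outliers(readings)
print(flagged)
```

What to do with a flagged point depends on the problem: it may be a data-entry error to correct, or a genuine extreme value worth keeping.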

An important part, but not the only one. Linear regression is a machine learning algorithm based on supervised learning that performs the regression task. How closely a machine learning model approximates the target function can be evaluated in a number of different ways, often specific to the machine learning algorithm.
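As a small illustration of fitting and evaluating a regression model, the sketch below fits a simple linear regression by ordinary least squares and scores it with mean squared error. Mean squared error is assumed here as the measure of fit; the function names and toy data are hypothetical.

```python
def fit_simple_linear(xs, ys):
    """Closed-form least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def mean_squared_error(xs, ys, slope, intercept):
    """Average squared difference between predictions and targets."""
    return sum((slope * x + intercept - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]  # toy data generated from y = 2x + 1
slope, intercept = fit_simple_linear(xs, ys)
cost = mean_squared_error(xs, ys, slope, intercept)
print(slope, intercept, cost)
```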

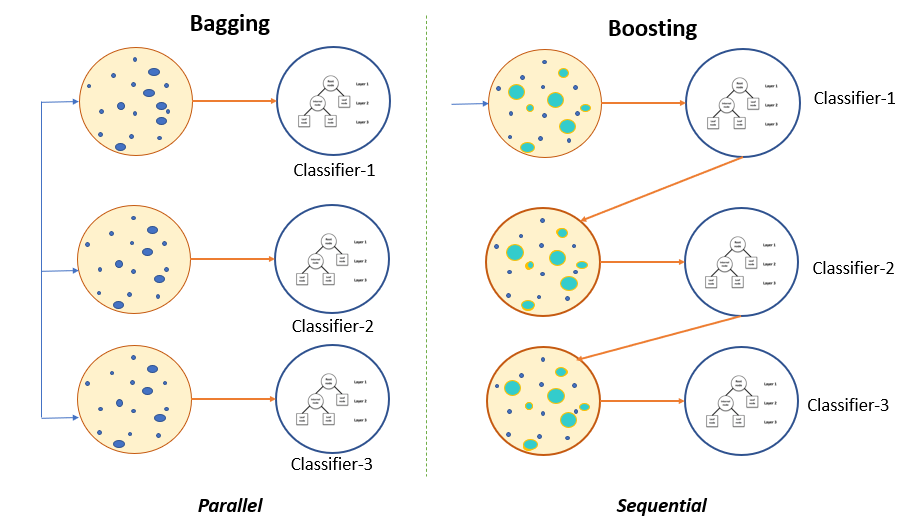

This chapter illustrates how we can use bootstrapping to create an ensemble of predictions. High variance (less than decision tree and bagging). Bias-variance calculation example. Random forests have become a very popular "out-of-the-box" or "off-the-shelf" learning algorithm that enjoys good predictive performance with relatively little hyperparameter tuning.

Support Vector Machine (SVM) is a supervised learning algorithm mostly used for classification tasks, but it is also suitable for regression tasks. Chapter 11: Random Forests. In this post you will discover the Bagging ensemble algorithm and the Random Forest algorithm for predictive modeling.

After reading this post you will know about. A machine learning engineer who is interested in democratizing machine learning and deep learning. In layman's terms, Machine Learning (ML) can be explained as automating and improving the learning process of.

SVM distinguishes classes by drawing a decision boundary. Let's put these concepts into practice: we'll calculate bias and variance using Python. Simple linear regression predicts a target variable from a single independent variable.
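Libraries such as mlxtend offer a ready-made bias-variance decomposition. The sketch below does not use one; it is a standard-library simulation that assumes the true function is known, which is only possible with synthetic data. The generating function `true_f`, the deliberately simple mean-predicting model, and all parameter values are assumptions made for illustration.

```python
import random

def true_f(x):
    """Assumed ground-truth function used to generate synthetic targets."""
    return 2.0 * x

def fit_mean_model(train):
    """A deliberately simple, high-bias model: always predict the mean target."""
    mean_y = sum(y for _, y in train) / len(train)
    return lambda x: mean_y

def bias_variance(fit, test_xs, rounds=200, n_train=30, noise=1.0, seed=0):
    """Estimate average squared bias and variance over many training sets."""
    rng = random.Random(seed)
    preds = []  # preds[r][i] is the round-r model's prediction at test_xs[i]
    for _ in range(rounds):
        train = []
        for _ in range(n_train):
            x = rng.uniform(0, 1)
            train.append((x, true_f(x) + rng.gauss(0, noise)))
        model = fit(train)
        preds.append([model(x) for x in test_xs])
    bias_sq = variance = 0.0
    for i, x in enumerate(test_xs):
        column = [p[i] for p in preds]
        avg = sum(column) / rounds
        bias_sq += (avg - true_f(x)) ** 2                          # squared bias at x
        variance += sum((p - avg) ** 2 for p in column) / rounds   # spread at x
    return bias_sq / len(test_xs), variance / len(test_xs)

bias_sq, variance = bias_variance(fit_mean_model, test_xs=[0.0, 0.25, 0.5, 0.75, 1.0])
print(bias_sq, variance)
```

For this constant model the squared bias dominates the variance, which is the expected pattern for an underfitting learner.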

Random Forest is one of the most popular and most powerful machine learning algorithms. Deep Learning is a modern method of building, training, and using neural networks. High variance (less than decision tree). Random Forest.

How would you screen for outliers, and what should you do if you find one? The simplest way to do this would be to use a library called mlxtend (machine learning extension). Have helped many researchers with their machine learning-related research and helped many organizations embed machine learning into their systems and develop artificial intelligence-powered.

Bagging Boosting Machine Learning Interview Questions Edureka. It is a type of ensemble machine learning algorithm called Bootstrap Aggregation or bagging. A brief study on E-mail image spam filtering.

This book covers various essential machine learning methods (e.g., regression, classification, clustering, dimensionality reduction, and deep learning) from a unified mathematical perspective of seeking the optimal model parameters that minimize a cost function. Bootstrap aggregating, also called bagging, is one of the first ensemble algorithms. Basically, it's a new architecture.
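Bootstrap aggregating can be sketched as: draw bootstrap samples, fit one base model per sample, and average the predictions. The example below is a minimal standard-library illustration for one-dimensional regression. The choice of a one-split regression stump as the base learner, the function names, and the toy data are all assumptions; in practice deeper decision trees are the usual base learner.

```python
import random

def fit_stump(points):
    """Fit a one-split regression stump to (x, y) pairs by minimizing squared error."""
    xs = sorted({x for x, _ in points})
    ys = [y for _, y in points]
    overall = sum(ys) / len(ys)
    best = (float("inf"), None, overall, overall)
    # Candidate thresholds are midpoints between adjacent distinct x values.
    for lo, hi in zip(xs, xs[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in points if x <= t]
        right = [y for x, y in points if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if sse < best[0]:
            best = (sse, t, lm, rm)
    _, t, lm, rm = best
    if t is None:
        return lambda x: overall  # degenerate sample: every x is identical
    return lambda x: lm if x <= t else rm

def bagging_fit(points, n_estimators=25, seed=0):
    """Fit one stump per bootstrap sample of the training points."""
    rng = random.Random(seed)
    n = len(points)
    return [fit_stump(rng.choices(points, k=n)) for _ in range(n_estimators)]

def bagging_predict(models, x):
    """Aggregate by averaging the individual model predictions."""
    return sum(m(x) for m in models) / len(models)

data = [(x, 0.0 if x < 5 else 10.0) for x in range(10)]  # toy step function
models = bagging_fit(data)
print(bagging_predict(models, 0), bagging_predict(models, 9))
```

Averaging over models trained on different resamples is what reduces the variance of the ensemble relative to a single model. For classification, a majority vote would replace the average.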

Machine Learning is a part of artificial intelligence. However, the review did not cover recent research articles in this area, as it was published in 2008, and a comparative analysis of the different content filters was also missing. By making it available for everyone at a relatively low price.

Polynomial regression transforms the original features into polynomial features of a given degree or variable and then applies linear. Nowadays in practice no one separates deep.
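The feature transformation behind polynomial regression can be sketched as follows. The sentence above is cut off, so this example assumes that a linear model is then applied to the expanded features. The function names and the weights are hypothetical values chosen for illustration, not fitted parameters.

```python
def polynomial_features(x, degree):
    """Expand a single feature x into [x, x^2, ..., x^degree]."""
    return [x ** d for d in range(1, degree + 1)]

def predict(x, intercept, weights):
    """Apply a linear model to the polynomial features of x."""
    features = polynomial_features(x, degree=len(weights))
    return intercept + sum(w * f for w, f in zip(weights, features))

features = polynomial_features(2.0, 3)
y_hat = predict(2.0, intercept=1.0, weights=[0.5, 0.25])
print(features, y_hat)
```

The model stays linear in its weights even though the fitted curve is nonlinear in `x`.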

Bagging Vs Boosting In Machine Learning Geeksforgeeks

Ensemble Learning Bagging Boosting

Bagging And Boosting Explained In Layman S Terms By Choudharyuttam Medium

Bagging Ensemble Meta Algorithm For Reducing Variance By Ashish Patel Ml Research Lab Medium

Ensemble Learning Explained Part 1 By Vignesh Madanan Medium

Bagging Bootstrap Aggregation Overview How It Works Advantages

Boosting And Bagging Explained With Examples By Sai Nikhilesh Kasturi The Startup Medium

Ensemble Learning Bagging And Boosting By Jinde Shubham Becoming Human Artificial Intelligence Magazine

Ensemble Methods In Machine Learning Bagging Versus Boosting Pluralsight

What Is Bagging In Machine Learning And How To Perform Bagging

Mathematics Behind Random Forest And Xgboost By Rana Singh Analytics Vidhya Medium

Ml Bagging Classifier Geeksforgeeks

Ensemble Learning Bagging And Boosting Explained In 3 Minutes

Ensemble Learning Bagging Boosting Stacking And Cascading Classifiers In Machine Learning Using Sklearn And Mlextend Libraries By Saugata Paul Medium

How To Create A Bagging Ensemble Of Deep Learning Models By Nutan Medium